Nvidia - unsigned integer issues

Yesterday I had another bunch of fun with my laptop. My normal working environment is a desktop with an AMD graphics card, so when choosing a laptop it was important for me to get an Nvidia GPU so I could test code on both. This was a smart decision, as there are so many differences between them. This time I encountered another one.

History

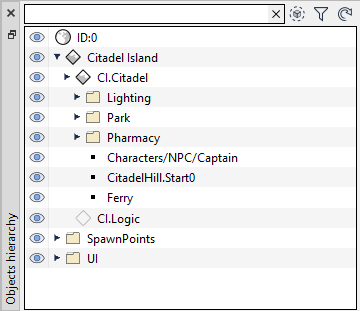

As always, a little bit of background: when building tools you need to think about nice interactive object selection. The beginning was rough, as I decided to use the physics ray-trace system for picking. It was a simple system: I sent a ray through the world and tried to pick the objects that collided with it.

The issues were:

- every object needed to have a collision body,

- it did not work very well with objects that had an alpha mask,

- it did not support geometry that was deformed in shaders.

After a bit of a struggle with retrieving data from the GPU, I finally had a working replacement built around an extra GBuffer that stores object IDs. It resolved all the problems I had with the old system and added a few new ones. These were:

- filling this new GBuffer in the shader,

- retrieving data on CPU,

- the issue with selecting objects that are behind the selected objects (I just do not do this).
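For illustration, filling such an ID buffer from a fragment shader might look roughly like the sketch below. It assumes an unsigned integer render target bound as the second color attachment. The names `uObjectId`, `outColor`, and `outMrt1` are hypothetical and are not taken from the actual engine.

```glsl
#version 330 core

// Hypothetical per-draw uniform holding the ID of the object being rendered.
uniform uint uObjectId;

layout(location = 0) out vec4 outColor;  // regular color output (placeholder value below)
layout(location = 1) out uvec4 outMrt1;  // ID target, assumed to use an unsigned integer format

void main()
{
    outColor = vec4(1.0);

    // Store the ID in the first channel; the other channels are unused in this sketch.
    outMrt1 = uvec4(uObjectId, 0u, 0u, 0u);
}
```

Because the ID is written by the same shader that draws the object, pixels discarded by an alpha mask and geometry deformed in the vertex stage end up correct in the ID buffer without any extra work. Assuming OpenGL, the pixel under the cursor could then be read back on the CPU with `glReadPixels` using an integer format such as `GL_RGBA_INTEGER` with `GL_UNSIGNED_INT`.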

Thanks to this system I could also render the selection. I just sacrificed one bit of the ID and used it to flag whether the object is selected. This way I can highlight it nicely using this shader code:

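    uvec4 pix = texelFetch(texMrt1, ivec2(gl_FragCoord.xy), 0);
    // Bit 31 of the ID (stored in pix.x) marks a selected object; skip everything else.
    // The parentheses are required because == binds tighter than &.
    if ((pix.x & (1u << 31)) == 0u)
        discard;
    return vec4(1.0, 1.0, 0.0, 0.5);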

And that worked nicely.

Sadly it is pretty visually obstructive, so I decided to improve it and add an outline. This worked well and it was super easy. I just check whether the neighboring values match the pixel being tested and, if they do not, render a more intense color.
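A minimal sketch of that neighbor test could look like the following. It is not the original shader: it assumes the ID lives in the `.x` channel with the top bit used as the selection flag, compares only the four direct neighbors, and uses hypothetical names (`fetchId`, `SELECTED_BIT`, `fragColor`).

```glsl
#version 330 core

uniform usampler2D texMrt1;  // ID buffer; top bit of .x is assumed to be the selection flag

out vec4 fragColor;

const uint SELECTED_BIT = 0x80000000u;  // same value as 1u << 31

// Fetch the packed ID, clamping the coordinates so reads never leave the texture.
uint fetchId(ivec2 p)
{
    ivec2 maxCoord = textureSize(texMrt1, 0) - ivec2(1);
    return texelFetch(texMrt1, clamp(p, ivec2(0), maxCoord), 0).x;
}

void main()
{
    ivec2 p = ivec2(gl_FragCoord.xy);
    uint center = fetchId(p);

    // Skip pixels that do not belong to a selected object.
    if ((center & SELECTED_BIT) == 0u)
        discard;

    // A pixel is on the outline if any direct neighbor holds a different value.
    bool edge =
        fetchId(p + ivec2(-1,  0)) != center ||
        fetchId(p + ivec2( 1,  0)) != center ||
        fetchId(p + ivec2( 0, -1)) != center ||
        fetchId(p + ivec2( 0,  1)) != center;

    // Outline pixels get a more opaque yellow than the interior fill.
    fragColor = edge ? vec4(1.0, 1.0, 0.0, 1.0) : vec4(1.0, 1.0, 0.0, 0.5);
}
```

The clamp is there because the result of an out-of-bounds `texelFetch` is undefined unless robust buffer access is enabled, which matters for pixels on the screen border.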

Problem

This is where the real story starts. The above screenshot was taken on an AMD Radeon R9 390, where everything looks great. On the other hand, my laptop with an Nvidia GTX 1660 Ti gave me a super weird result:

Because it is always easier to investigate things on a smaller scale, I decided to scan in only one direction.

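    // Parentheses are required because == binds tighter than &.
    if ((pix.x & (1u << 31)) == 0u)
        discard;
    // Compare only against the pixel to the left.
    uvec4 pixLeft = texelFetch(texMrt1, ivec2(gl_FragCoord.xy) - ivec2(1, 0), 0);
    if (pix.x != pixLeft.x)
        return vec4(1.0, 1.0, 0.0, 0.5);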

This still resulted in the same effect:

Now the weird part is that if I replace

    if (pix.x != pixLeft.x)

with

    if (pix.x < pixLeft.x)

we get:

Which is stable, but then when I replace it with:

I'm not really happy about it, but this is a purely visual thing that I can return to when I have some spare time, which right now I do not.
